You just told an AI to come up with ten marketing headlines. The answer came right away and was great. It felt like magic, light, and clean. But behind that one question is an industrial process that uses a lot of energy and water. It turns out that the cloud has a very real and growing impact on the environment. We’re just now realizing how much our convenience costs us.

It’s not just about training big models. It’s about the billions of requests that are handled every day. There is a hidden engine behind the generative AI revolution that uses a lot of resources. Let’s open the curtain.

The Triple Threat: Computing, Cooling, and Carbon

Generative AI doesn’t just “think.” It does math. In a hurry. Each response takes trillions of operations, run in data centers full of specialized chips. That takes a lot of power, and the power has to come from somewhere.

That source is often fossil fuels. The result is a big carbon footprint. But the problem doesn’t end with emissions.

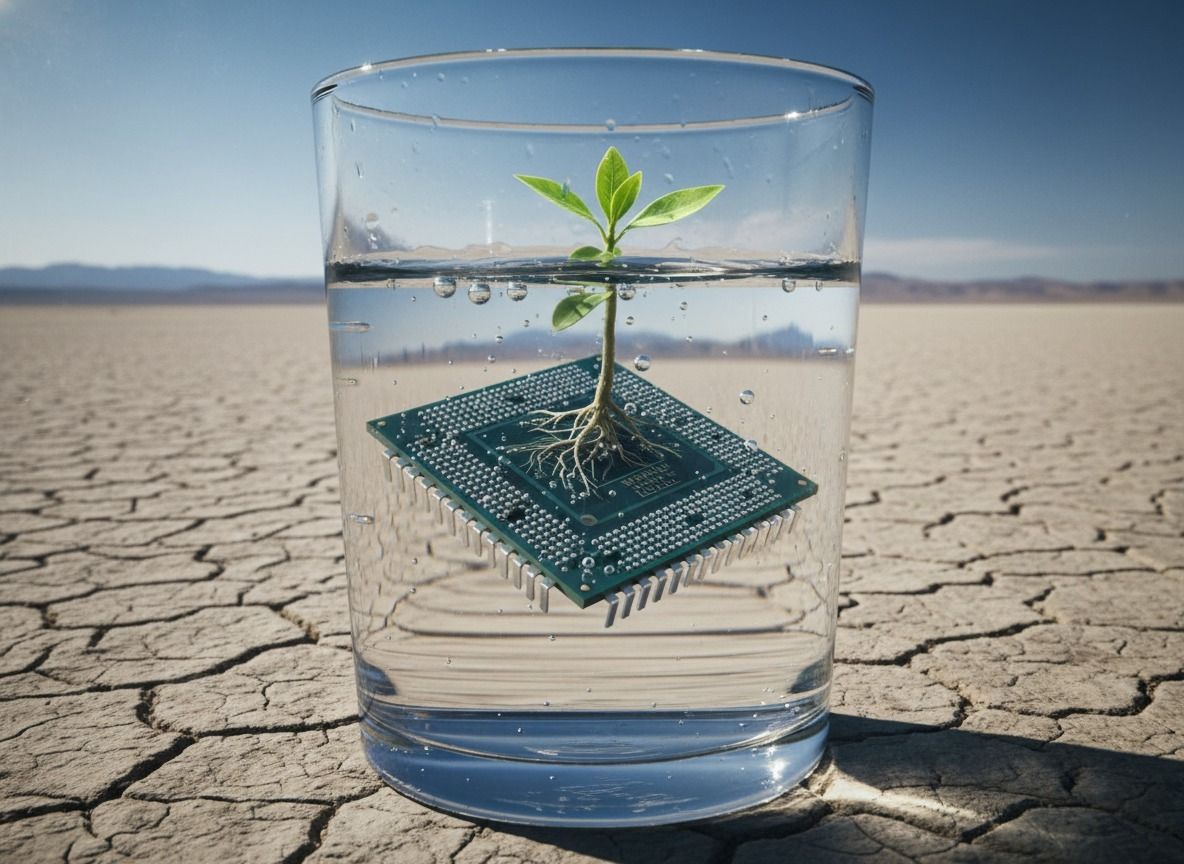

Those powerful chips get really hot. They need to be kept cool all the time. Evaporative cooling systems are common in big data centers. These systems use millions of gallons of water. That is the triple threat: computing, cooling, and carbon.

Think of it as a tax that you can’t see on every digital interaction.

The Inference Problem: Beyond the Training Hype

We hear a lot about how expensive it is to train models. For example, training a model like GPT-3 has been estimated to release more than 550 tons of CO2. That gets people’s attention. This focus, however, is misplaced.

Inference is the real, never-ending challenge.

Using the trained model is called inference. It’s the billions of conversations and pictures that people make with ChatGPT and Midjourney every day. Training makes the factory. Inference keeps that factory running all day, every day. As adoption rises, inference’s total effect is now the biggest environmental cost.

In its 2023 Environmental Report, Google confirmed this. The company said that the amount of energy used by its data centers had gone up by almost 50%. It linked the rise directly to the use of AI across its products. Its ability to decarbonize can’t keep up with this growth.

The Shocking Water Needs of Large Language Models

Let’s talk about H2O. This is probably the part of AI’s footprint that gets the least attention. A data center doesn’t just use electricity. It needs a lot of water to stay cool.

Scientists are beginning to put numbers on this. A recent study from UC Riverside gave a shocking example. The researchers estimated that a simple conversation with a big model, roughly 20 to 50 prompts, could use up half a liter of fresh water. That’s a whole water bottle for a short talk.
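To make that figure easier to picture, here is a back-of-the-envelope sketch that spreads the half liter across the 20 to 50 prompts mentioned above. The function name and constant are illustrative, and the even split per prompt is an assumption; real usage would vary with the model, the data center, and the weather.

```python
# Rough arithmetic only: assumes the half liter is spread evenly over the prompts.
CONVERSATION_WATER_ML = 500.0  # half a liter per conversation, per the estimate above


def water_per_prompt_ml(prompts_in_conversation: int) -> float:
    """Return the average water use per prompt, in milliliters."""
    if prompts_in_conversation <= 0:
        raise ValueError("prompts_in_conversation must be positive")
    return CONVERSATION_WATER_ML / prompts_in_conversation


low = water_per_prompt_ml(50)   # longer conversation, less water per prompt
high = water_per_prompt_ml(20)  # shorter conversation, more water per prompt
print(f"Roughly {low:.0f} to {high:.0f} mL of water per prompt")
```

Under these assumptions, each prompt works out to somewhere between a couple of teaspoons and a couple of tablespoons of water.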

This isn’t just a number. It has effects in the real world.

Think about Microsoft’s big plans to build data centers in Arizona. The state is going through an unprecedented megadrought. This sets up a direct conflict between progress in technology and a shortage of basic resources. People in the area are right to be asking questions.

Microsoft’s Arizona Problem: A Case Study

Microsoft wants to build a few new data centers in Goodyear, Arizona. Of course, these facilities will be able to handle cloud and AI workloads. But Arizona has put strict limits on water use because there is not enough of it.

The company has pledged to be “water positive” by the year 2030. This means that it plans to put back more water than it uses. But the immediate effect on the local area is a real worry. This situation perfectly sums up the main problem in the industry.

How do we make sure that global digital progress doesn’t hurt the environment in our own communities? It’s not easy to find answers here. Arizona is a small part of a big problem.

Finding the Way to Greener AI

The good news is that the industry is moving forward. There is a growing movement toward “Green AI.” The goal is to get better results with fewer resources.

The strategies are many-sided:

- Efficient Hardware: Google’s TPUs and other new chips are made just for AI workloads. They do more calculations for every watt of energy.

- More intelligent algorithms: Model quantization and distillation are two methods that make models smaller and faster. They keep most of the intelligence of their bigger cousins.

- Carbon-Aware Computing: This is a game-changer. Companies can plan big AI training jobs for times when there is a lot of solar or wind power on the grid. It’s a simple change that works very well.
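As a minimal sketch of the carbon-aware idea, the snippet below picks the start hour for a job so that it runs during the cleanest stretch of an hourly grid forecast. Everything here is hypothetical: the function name, the forecast values, and the assumption that a carbon-intensity forecast (in grams of CO2 per kWh) is available at all. It is an illustration of the scheduling logic, not how any particular company does it.

```python
from typing import Sequence


def best_start_hour(forecast: Sequence[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total
    carbon intensity. `forecast` holds one value per hour (gCO2/kWh)."""
    if job_hours <= 0 or job_hours > len(forecast):
        raise ValueError("job_hours must be between 1 and len(forecast)")

    # Sliding window: start with the first window, then shift one hour at a time.
    window = sum(forecast[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


# Made-up forecast: the grid is cleanest mid-day, when solar output peaks.
example_forecast = [500, 480, 300, 200, 210, 450]
print(best_start_hour(example_forecast, job_hours=2))
```

With this made-up forecast, a two-hour job would be scheduled to start at hour 3, the cleanest two-hour window.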

Google used its DeepMind AI to make the cooling in its data centers work better. This led to a 40% drop in the amount of energy used for cooling. It’s a strong proof of concept.

The Efficiency Paradox: An Expert’s View

I talked to Dr. Anya Sharma, who is an energy informatics researcher at Stanford. She gave a very important, nuanced point of view.

“We’re stuck in an efficiency paradox,” Dr. Sharma said. “We make models 50% more efficient, but then we send them to a user base that grows by 200%. The net effect is still a huge rise in the use of all resources. We’re both champions of efficiency and failures of consumption.”
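The arithmetic behind the paradox is easy to check. The sketch below reads “50% more efficient” as each request needing half the resources, and “grows by 200%” as three times as many requests. Both readings are assumptions, and the function name is illustrative.

```python
def net_resource_change(efficiency_gain: float, usage_growth: float) -> float:
    """Return the fractional change in total resource use.

    efficiency_gain: fraction of resources saved per request (0.5 = half as much).
    usage_growth: fractional growth in requests (2.0 = 200% more, i.e. 3x).
    """
    per_request = 1.0 - efficiency_gain    # resources needed per request, relative to before
    total_requests = 1.0 + usage_growth    # number of requests, relative to before
    return per_request * total_requests - 1.0


change = net_resource_change(efficiency_gain=0.5, usage_growth=2.0)
print(f"Total resource use changes by {change:+.0%}")  # prints "+50%"
```

Under this reading, halving the cost of each request still leaves total resource use 50% higher once usage triples.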

This insight matters. It means that technological fixes alone aren’t enough. We need to change the way we think about and use these powerful AI tools.

A Call to Create and Consume with Care

So, what does this mean for us? The genie is out of the bottle. We can’t ignore how powerful AI could be. But we need to build it and use it in a responsible way.

This means that developers should make efficiency a top priority. For businesses, it calls for complete openness in environmental reporting. For us, the users, it calls for a new kind of mindfulness.

Do you have to make 100 pictures to find the right one? Maybe not. We need to change the way we think about digital abundance from “there’s so much of it, it’s free” to “I value and use it on purpose.”

Keep in mind that AI tools have a small but real weight the next time you use one. We shouldn’t build the future of AI on the backs of the planet it’s supposed to help. We don’t have much time to get this right. Let’s not let it go to waste.